The Justice League is a group of superheroes, including Batman and Wonder Woman, who fight to save their fictional world from the supervillain Darkseid. The Algorithmic Justice League (AJL), founded in 2016, likewise aspires to a more just and equitable world, particularly in how artificial intelligence (AI) is used. The AJL promotes four key principles in the fight against algorithmic control: affirmative consent, meaningful transparency, continuous oversight and accountability, and actionable critique.

Although labeled “artificial intelligence,” it is anything but artificial; as Kate Crawford writes in Atlas of AI, “AI is both embodied and material.” It is this materiality of AI that has made possible a new form of control over workers, which we define as algorithmic control. In this article, we conceptualize algorithmic control, explore how it affects workers, and examine how they are beginning to resist it. We conclude with some suggestions for worker-led resistance to algorithmic control.

What is an algorithm?

Across the globe, various stakeholders (including governments, academics, and activists) are grappling with how systems of algorithmic control are reshaping the world. An algorithm is a process or set of rules to be followed in calculations or other problem-solving operations, especially by a computer. It exists in immaterial form, yet its existence and use have material consequences. The rise of technologies such as cloud computing, which delivers computing services over the Internet (e.g., Amazon Web Services), has enabled organizations and businesses to automate aspects of their operations.

Some argue that algorithms are neutral. However, a growing body of work (see, for example, Ruha Benjamin, Race After Technology) shows how algorithms can be biased and discriminatory in practice, because they are written by human programmers following particular norms and instructions. When bias is fed into them, algorithms automate existing patterns of discrimination. This is especially true in the current ecosystem of Big Tech companies, which are overwhelmingly run by white men. In her book Algorithms of Oppression, Safiya Noble shows how Google’s search algorithms have discriminated against Black women and girls.
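To make the point about bias concrete, the short Python sketch below uses invented data to show how a naive model that learns only from past decisions reproduces the discrimination recorded in them. The hiring scenario, the `HISTORY` records, the `learn_hire_rates` and `recommend` functions, and the 0.5 threshold are all hypothetical assumptions for illustration; real systems are far more complex, but they can carry bias forward in a similar way.

```python
from collections import defaultdict

# Hypothetical historical decisions: (group, qualified, hired).
# Invented for illustration: equally qualified candidates from group "B"
# were hired less often than those from group "A".
HISTORY = [
    ("A", True, True), ("A", True, True), ("A", True, True), ("A", True, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", True, False),
]


def learn_hire_rates(history):
    """'Train' a naive model: the past hire rate for each (group, qualified) pair."""
    counts = defaultdict(lambda: [0, 0])  # key -> [times hired, total seen]
    for group, qualified, hired in history:
        counts[(group, qualified)][0] += int(hired)
        counts[(group, qualified)][1] += 1
    return {key: hired / total for key, (hired, total) in counts.items()}


def recommend(rates, group, qualified, threshold=0.5):
    """Recommend hiring only if the learned past hire rate clears the (hypothetical) threshold."""
    return rates.get((group, qualified), 0.0) >= threshold


rates = learn_hire_rates(HISTORY)
print(recommend(rates, "A", True))  # True: past rate for group A was 0.75
print(recommend(rates, "B", True))  # False: past rate for group B was 0.25
```

Both candidates are equally qualified, yet the model treats them differently simply because it has "codified the past."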

Algorithmic control - Reality

Algorithms have deepened control over work to an extent unprecedented in the history of industrialization. Placing the algorithm at the heart of the labor process secures both control and profit maximization, an example of what Marx called “valorization in command.” These algorithms are designed to measure how quickly workers complete tasks. If platform workers fail to meet the algorithm’s standards, management has the power to immediately alter their remuneration and/or dismiss them from the platform by deactivating or disconnecting their accounts. In December 2020, Uber drivers in Johannesburg, South Africa, launched a protest by disabling the Uber app and refusing ride requests. Among the drivers’ complaints were the opaque way in which Uber blocked their accounts and the inequitable way in which Uber unilaterally set and implemented the fees drivers earned.
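The Python sketch below illustrates, in deliberately simplified form, the kind of rule described above: pay is reduced when a task takes longer than a target time, and an account is flagged for deactivation when too many recent tasks are late. Because platforms do not disclose their algorithms, every name and number here (`TARGET_MINUTES`, `LATE_DEDUCTION`, `DEACTIVATION_SHARE`, `pay_for`, `should_deactivate`) is a hypothetical assumption, not Uber's or any other company's actual rule.

```python
from dataclasses import dataclass

# Hypothetical parameters, invented purely for illustration:
# real platforms do not publish the rules their algorithms apply.
TARGET_MINUTES = 30.0      # expected time per task
PAY_PER_TASK = 40.0        # base pay per completed task (arbitrary units)
LATE_DEDUCTION = 8.0       # amount withheld when a task exceeds the target time
DEACTIVATION_SHARE = 0.5   # flag the account if MORE than this share of tasks is late


@dataclass
class Task:
    minutes_taken: float


def pay_for(task: Task) -> float:
    """Full pay if the task meets the target time, reduced pay otherwise."""
    if task.minutes_taken <= TARGET_MINUTES:
        return PAY_PER_TASK
    return PAY_PER_TASK - LATE_DEDUCTION


def should_deactivate(recent: list[Task]) -> bool:
    """Flag the account when the share of late tasks exceeds the threshold."""
    if not recent:
        return False
    late = sum(1 for t in recent if t.minutes_taken > TARGET_MINUTES)
    return late / len(recent) > DEACTIVATION_SHARE


week = [Task(25), Task(35), Task(40), Task(28)]
print(sum(pay_for(t) for t in week))  # 144.0: two late tasks cost 8.0 each
print(should_deactivate(week))        # False: 2 of 4 late is not more than half
```

Even in this toy version, a worker who cannot see the thresholds has no way of knowing why pay fell or an account was blocked.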

In The Uberisation of Work, Edward Webster shows how algorithms enable companies like Uber to concentrate on high value-adding activities whilst divesting themselves of mainstream employment liabilities through technology-enabled outsourcing and subcontracting. These companies display monopolistic tendencies and bypass standard corporate governance as well as standard employment practices.

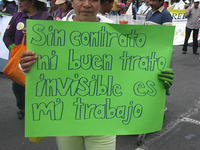

What is distinctive about algorithmic control is that it is invisible and inaccessible. In general, platform workers do not have access to an algorithm’s source code. As the International Labour Organization’s (ILO) 2021 World Employment and Social Outlook report explains, accessing the underlying source code of an algorithm is the only way to determine whether it produces anti-competitive and/or discriminatory outcomes. Yet trade secrecy laws and intellectual property rules at the level of the World Trade Organization make this source code difficult to access. The ILO further argues that this information asymmetry deepens the imbalance of power between algorithm owners and algorithm subjects.

Algorithmic control - Resistance

Although algorithmic control appears insurmountable, workers are themselves beginning to use algorithms in the fight for control over their working conditions. Following negotiations, Spain has passed the Rider Law, which recognizes delivery riders as employees of digital platforms. The law also requires digital platforms to be transparent about how their algorithms affect working conditions. It is equally critical to consider consumers when discussing algorithmic control and resistance to it. Consumers, too, have become producers of value, because algorithms mine their personal data. It could be argued that consumers of Big Tech perform unpaid labor when they use its platforms, which places them closer to workers than to the managers of Big Tech firms.

When analyzing experiments in resisting the different forms of algorithmic control exercised by Big Tech companies, researchers also need to consider the importance of space. Systems of algorithmic control take particular forms in different spatial contexts, and these differences matter beyond the national level. Given persistent inequalities between the Global North and the Global South, each context brings to the fore particularities that can deepen conversations about resisting algorithmic control. These differences are critical to conceptualizing a future of work that prioritizes the worker over the corporation. Cathy O’Neil captures this best in Weapons of Math Destruction when she writes:

“Big Data processes codify the past. They do not invent the future. Doing that requires moral imagination, and that’s something only humans can provide. We have to explicitly embed better values into our algorithms, creating Big Data models that follow our ethical lead. Sometimes that will mean putting fairness ahead of profit.”

Sandiswa Mapukata, University of the Witwatersrand, South Africa <sandiswa.mapukata@wits.ac.za>

Shafee Verachia, University of the Witwatersrand, South Africa <mohammed.verachia@wits.ac.za>

Edward Webster, University of the Witwatersrand and past president of ISA Research Committee on Labour Movements (RC44) <Edward.Webster@wits.ac.za>